Introduction

Ever since the 2005 DARPA Grand Challenge, autonomous vehicles (AV) have been a popular topic for research. In this blog, I will share how I leveraged lane detection using OpenCV and road segmentation by training an ML model using PyTorch. This research was done for my final project in the Computer Vision class (EECE 5639) at Northeastern University during Spring 2024.

In this research, the lane detection system is replicated as robust and quick to use in a real-time system without machine learning (for lane detection). Also, the KITTI dataset has been used for research purposes. The code can be accessed here.

Fun Fact: The whole software is powered to detect lanes and segment roads using linear algebra and matrix multiplication. xD

Lane Detection

Lane detection is fundamental in autonomous driving systems, ensuring safe and efficient road navigation. As the pursuit of autonomous vehicles continues to advance, the accurate identification and tracking of lanes have become increasingly paramount. Lane detection enables vehicles to maintain their position within lanes, execute lane changes, and make informed real-time trajectory decisions.

Intuition

Consider a stereo vision camera setup. If we have a constructed 2d frame, and we intend to extract the lane, we will:

- Extract the region of interest (ROI).

- Preprocess the image to remove any noise and distortion in the image signal.

- Apply X algorithm to extract lane information.

Here, the X algorithm is Hough Transform, which is a feature extraction technique commonly used in computer vision. Hough Transform helps us to extract straight lines in an image frame.

Image Preprocessing

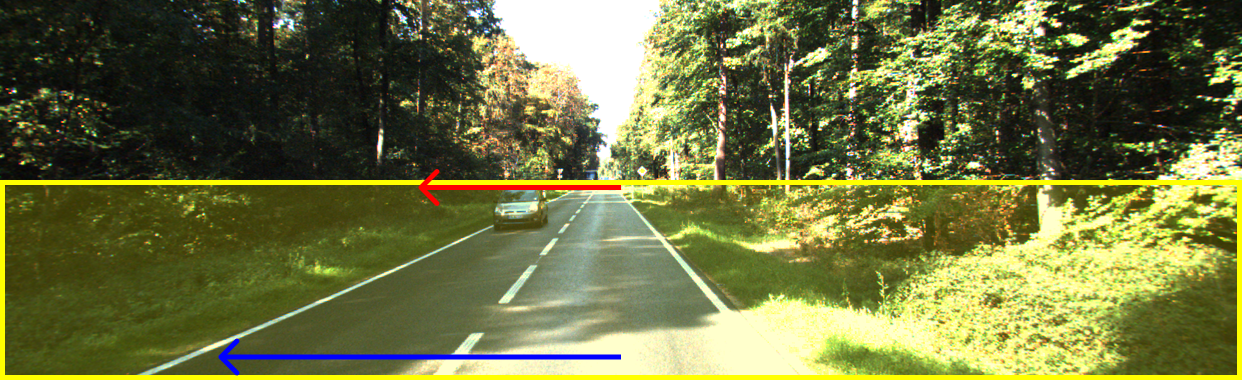

This is the preliminary stage. After reading a couple of research papers on how they preprocessed each image frame, I explored a few techniques. First, the ROI of the image frame is considered. For this step, a few assumptions have been made.

After getting the ROI from the image frame, the color channel is converted to grayscale. Then, gaussian blur is applied (noise reduction) for canny edge detection and then morphological dilation in the image frame to refine and enhance detected lane markings.

Lane Extraction

To extract the lane information from the image frame, I initially used the conventional Hough Transform. This should have worked, but the lane extraction failed. Upon further investigation, I found that I wasn’t choosing only the lane markings from the ROI but also other straight lines in the image frame. These false lines were from the shadows on the road, cracks, and, weirdly, a line between the new road asphalt and the old road (the color difference counted as a line by the canny edge detection).

I tweaked the parameters in the canny edge detection step, yet it was still a failure. Then, I found an article on Medium about using homographies for lane detection. I used this technique first, then preprocessed the image and applied the Hough transform. Voila, it worked; I could concentrate my lane extraction on the road using homography, omitting other objects in the ROI. The lane extraction was 20x accurate than the previous attempt (though it still struggled with the shadows and other objects).

Now, I wanted to make the system more accurate. I first experimented with the blurring techniques provided by OpenCV. I tried using bilateral blur instead of Gaussian blur, which gave me a 2.5x increase in lane extraction. But still, it wasn’t a huge increase in accuracy.

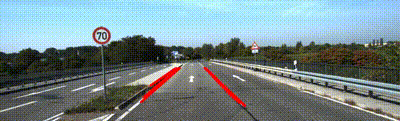

Then, I used the Probabilistic Hough Transform (PHT). This enhanced lane detection by efficiently detecting line segments in noisy images. Unlike the standard Hough Transform, PHT randomly selects a subset of edge points, reducing computational complexity while maintaining accuracy. By probabilistically sampling edge points, PHT focuses on prominent lines, such as lane markings, while ignoring noise and clutter.

After using these techniques, the lane detection system became more robust and could easily and quickly extract the lane markings from an image frame.

The key values can be seen below:

| Precision(%) | F1 | Time(sec) | Memory(MiB) | |

|---|---|---|---|---|

| SEQ_0001 (0:11 @ 10.37fps) | 66.22 | 77.47 | 1.02 | 475.49 |

| SEQ_0002 (0:08 @ 10.37fps) | 78.49 | 86.90 | 0.89 | 435.56 |

| SEQ_0011 (0:22 @ 10.37fps) | 68.52 | 77.69 | 1.17 | 484.00 |

| SEQ_0015 (0:29 @ 10.37fps) | 95.04 | 85.18 | 1.45 | 488.77 |

Road Segmentation

Intuition

The road region must be obtained from the image frame. Hence, the technique to use is image segmentation. In the scope of image segmentation, here, it is semantic segmentation. The ML technique proposed to use was the U-Net algorithm. It is used for segmentation in smaller datasets for high-previse segmentation maintaining speed.

Dataset

The image dataset was used explicitly from the KITTI dataset for semantics. The image dataset was divided into training, validation, and test sets. The split from the dataset is 75% for training, 15% for validation, and another 15% for testing purposes. The total number of images available is 200 (in April 2024).

Before training, the image dataset was augmented to add more information and make the model robust and work well with the new dataset. The model was trained using the U-Net algorithm with the Adam optimizer.

Observation

- The ML model is robust, and the segmentation is accurate. With the new dataset, the model could segment without any overfitting.

- The model’s key results are shown below in the table

| Type | Values(%) |

|---|---|

| Pixel Accuracy | 99.45 |

| IoU Accuracy | 99.35 |

| Train Error | 2.3 |

| Test Error | 3.2 |

Future Scope

The lane detection system marked the line where the curb and the ground met when tested on an image set without any lane markings. This could be said to be a success, but a more robust system is still required in an off-road terrain travel scenario.

Also, the road segmentation system could be made to work in unison with the lane detection system for more accurate results.

References

- “Advanced Lane Detection for Autonomous Vehicles using Computer Vision techniques” by Raj uppala, Medium

- “Hough Transform” notes from the School of Informatics, University of Edinburgh

- “Preprocessing Methods of Lane Detection and Tracking for Autonomous Driving” by Akram Heidarizadeh, arxiv